Choosing the right AI translation stack is becoming a core part of how modern websites scale across languages. With machine translation engines, LLM-powered systems, and varying levels of human review all available, teams now face a new challenge: figuring out the right mix for each type of content, rather than picking a single tool and hoping it works for everything.

As websites expand into more markets, the pressure to deliver fast, accurate, and brand-safe translations continues to increase. If you want a clear roadmap for designing an efficient, scalable translation stack, keep reading. This guide breaks it all down step by step.

The new AI translation landscape

AI translation is evolving faster than ever, and each option—MT engines, LLMs, and hybrid workflows—comes with its own strengths and limitations. Understanding these differences is the first step toward building the right translation strategy for your website.

MT engines

Machine Translation (MT) engines like Google Translate, DeepL, and Microsoft Translator have long been the backbone of automated translation. They rely on highly optimized models built for speed and consistency, making them ideal for handling large volumes of content. Because they’re trained on massive multilingual datasets, MT engines tend to deliver predictable, stable results that are easy to control.

The most significant advantage of MT engines is efficiency. When you need to translate thousands of product pages or articles quickly, MT delivers fast results at a low cost. However, because they operate in a more rigid, pattern-based way, MT engines can struggle with nuanced language, tone, or context-heavy content. That’s where more flexible technologies like LLMs start to shine.

LLM-based translation

Large Language Models such as GPT or Claude provide a much more contextual understanding of language. Instead of simply converting words from one language to another, they interpret meaning, tone, and structure. This leads to translations that feel more natural, more human-like, and better suited for creative or nuanced content.

LLMs also excel at following detailed instructions—maintaining brand tone, avoiding specific terms, or adapting to a particular writing style. However, their flexibility can sometimes lead to inconsistency if the model isn’t guided with explicit constraints or style rules. LLMs can also be more expensive to run at scale than traditional MT engines, especially on high-volume websites.

Hybrid MT + LLM workflows

A hybrid approach combines the speed of MT with the contextual intelligence of LLMs, creating a balanced workflow that maximizes quality while controlling cost. Typically, MT is used as the first pass to generate a fast, affordable draft, and an LLM refines the text by improving tone, consistency, or semantic accuracy.

This workflow has become a popular choice for websites with large content libraries that still require a higher level of quality, such as blogs, product documentation, or landing pages. Hybrid setups also offer greater flexibility, enabling teams to apply different rules based on content type, language, or the business value of specific pages.
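The two-pass flow described above can be sketched as follows. This is a minimal illustration, not a reference implementation: `mt_translate` and `llm_refine` are hypothetical callables standing in for whatever MT engine and LLM you actually use, and the stand-in functions only exist so the example runs on its own.

```python
from typing import Callable

def hybrid_translate(
    text: str,
    target_lang: str,
    mt_translate: Callable[[str, str], str],
    llm_refine: Callable[[str, str, str], str],
    refine: bool = True,
) -> str:
    """Run a fast MT first pass, then optionally refine the draft with an LLM."""
    draft = mt_translate(text, target_lang)
    if not refine:
        # Low-value pages can stop at the cheap MT draft.
        return draft
    # The refiner sees both the source and the draft so it can fix meaning and tone.
    return llm_refine(text, draft, target_lang)

# Stand-in engines (hypothetical) so the sketch is runnable without any API keys.
def fake_mt(text: str, lang: str) -> str:
    return f"[{lang}] {text}"

def fake_llm(source: str, draft: str, lang: str) -> str:
    return draft + " (refined)"

print(hybrid_translate("Hello", "fr", fake_mt, fake_llm))
print(hybrid_translate("Hello", "fr", fake_mt, fake_llm, refine=False))
```

Keeping the refinement step behind a flag is one way to let the same pipeline serve both MT-only and hybrid content.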

Mapping content types to the right translation method

Not every page on a website needs the same level of translation quality. Some content can rely entirely on AI, while other content requires human review or even full human editing due to legal, brand, or UX sensitivities. Choosing the right method for each content type helps you balance quality, cost, and speed without overinvesting where it’s not needed.

AI translation only

AI-only translation works best for large volumes of low-risk, informational content where perfect wording isn’t mission-critical. Examples include long-tail product descriptions, blog archives, FAQ databases, and support articles with straightforward instructions. In these cases, consistency and speed matter more than style, and MT or LLM output is usually more than sufficient.

Using AI-only for scalable content helps teams translate thousands of pages at a fraction of the cost while keeping updates fast. However, this method should be used for content where the business impact of minor inaccuracies is low. As long as the text is clear, functionally correct, and easy to understand, AI-only translation provides a strong ROI without requiring human intervention.

AI + light human review

A light human review is ideal for mid-tier content where clarity, tone, and brand perception matter but the stakes aren’t as high as legal or marketing-critical pages. This category typically includes product pages, recent blog articles, onboarding guides, UX microcopy, and key support documentation. These materials often need minor tweaks to their terminology, formatting, or tone to feel polished and brand-aligned.

In this workflow, AI handles the bulk of the translation, while a reviewer makes quick adjustments to ensure accuracy and improve readability. It balances cost and quality by avoiding a complete rewrite while still catching errors that automated systems might miss. This method works well for pages that influence user experience or purchasing decisions but don’t need the precision of a full compliance review.

AI + full human editing

Full human post-editing is reserved for content with high legal, financial, or brand risk: pages where nuance, precision, and cultural alignment are critical. This includes legal documents, terms & conditions, marketing landing pages, campaigns, UX copy for core funnels, and high-stakes support content such as refund policies and safety instructions. In these cases, even minor errors can lead to compliance issues or damage user trust.

In this workflow, AI provides an initial draft, but a professional editor rewrites, restructures, and verifies the translation to ensure accuracy, tone, and cultural fit. The goal is to protect brand credibility and guarantee that every detail is communicated perfectly across languages.

While full human editing is essential for this category, Linguise AI Translation helps reduce editor workload by producing a cleaner, more context-aware first draft, especially when using its premium AI refinement mode for selected high-value URLs. This means editors spend less time fixing basic errors and more time perfecting the final message.
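One way to make the three tiers above operational is a simple lookup from content type to workflow. The sketch below is illustrative only: the content-type keys are hypothetical labels loosely based on the examples in this section, and defaulting unknown types to the strictest tier is an assumed design choice, not a rule from any specific tool.

```python
from enum import Enum

class Tier(Enum):
    AI_ONLY = "ai_only"
    LIGHT_REVIEW = "ai_plus_light_review"
    FULL_EDIT = "ai_plus_full_editing"

# Hypothetical content-type labels; adapt them to your own CMS taxonomy.
TIER_BY_CONTENT_TYPE = {
    "blog_archive": Tier.AI_ONLY,
    "faq": Tier.AI_ONLY,
    "long_tail_product": Tier.AI_ONLY,
    "product_page": Tier.LIGHT_REVIEW,
    "onboarding_guide": Tier.LIGHT_REVIEW,
    "ux_microcopy": Tier.LIGHT_REVIEW,
    "legal": Tier.FULL_EDIT,
    "landing_page": Tier.FULL_EDIT,
    "refund_policy": Tier.FULL_EDIT,
}

def tier_for(content_type: str) -> Tier:
    """Return the workflow tier for a content type.

    Assumption: unknown types fall back to the strictest tier, so nothing
    risky is published without review by accident.
    """
    return TIER_BY_CONTENT_TYPE.get(content_type, Tier.FULL_EDIT)

print(tier_for("faq").value)
print(tier_for("press_release").value)
```

If review capacity is tight, a team might prefer to default unknown types to light review instead; the point is that the default is explicit.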

Designing a technical AI translation stack for websites

Building an effective AI translation stack isn’t just about choosing one engine—it’s about designing a flexible system that adapts to different languages, content types, and quality needs. A well-structured technical setup ensures consistent output, reduces manual work, and keeps translation operations scalable as your website grows.

Using multiple engines across languages

No single translation engine performs best across every language. Some MT engines excel at European languages, while others perform better with Asian or low-resource languages. By combining multiple engines, you can route each language to the provider that delivers the highest accuracy and most natural tone.

In many setups, this means using DeepL for languages like French or German, Google Translate for broad global coverage, and an LLM-based model where deeper contextual understanding is needed. This multi-engine strategy ensures every translation benefits from the strengths of the underlying technology.

Linguise AI Translation makes this even easier by automatically selecting the most suitable engine for each language and applying its advanced LLM refinement only when needed. Instead of manually managing dozens of configurations, Linguise intelligently balances Cloud AI speed with high-precision AI translation for complex languages or sensitive content. This gives teams greater control when expanding into new regions, letting them fine-tune quality without rebuilding their entire workflow.
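If you manage routing yourself, per-language engine selection can be as small as a dictionary lookup. The sketch below follows the example pairing mentioned above (DeepL for French and German, Google Translate as the broad default). The `"ja": "llm"` entry and the engine names as plain strings are assumptions for illustration; this is not how Linguise or any specific provider selects engines internally.

```python
# Hypothetical routing table: base language code -> engine identifier.
ENGINE_BY_LANGUAGE = {
    "fr": "deepl",
    "de": "deepl",
    "ja": "llm",  # assumption: a language where you found LLM output works better
}
DEFAULT_ENGINE = "google"  # broad coverage fallback

def pick_engine(target_lang: str) -> str:
    """Choose an engine from a locale such as 'fr', 'fr-CA', or 'de_AT'."""
    base = target_lang.lower().replace("_", "-").split("-")[0]
    return ENGINE_BY_LANGUAGE.get(base, DEFAULT_ENGINE)

for locale in ("fr-CA", "de_AT", "JA", "sw"):
    print(locale, "->", pick_engine(locale))
```

In practice the table would be filled in from your own quality evaluations per language rather than fixed up front.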

Fallback logic when primary engines fail

Even the best translation engines can produce unreliable output for specific sentence structures, idioms, or domain-specific terminology. Fallback logic prevents quality degradation by automatically switching to a secondary engine or LLM when the primary one fails or produces low-confidence results.

This kind of failsafe is crucial for maintaining consistency across large websites. Instead of relying on manual checks, the system can detect issues, such as missing context, unnatural phrasing, or incomplete sentences, and trigger an alternative engine to produce better results. With fallback logic, teams reduce risk, improve reliability, and ensure that errors don’t slip through at scale.
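A fallback chain along these lines might look like the sketch below. The engines are hypothetical callables, and `looks_unreliable` uses deliberately crude heuristics (empty output, extreme length ratio) as a stand-in for real confidence signals, which vary by provider.

```python
from typing import Callable, Optional, Sequence

Engine = Callable[[str, str], str]  # (text, target_lang) -> translation

def looks_unreliable(source: str, output: str) -> bool:
    """Crude quality gate. The thresholds are illustrative assumptions."""
    if not output.strip():
        return True
    ratio = len(output) / max(len(source), 1)
    return ratio < 0.3 or ratio > 3.0

def translate_with_fallback(text: str, lang: str, engines: Sequence[Engine]) -> str:
    """Try each engine in order until one returns acceptable output."""
    last_error: Optional[Exception] = None
    for engine in engines:
        try:
            output = engine(text, lang)
        except Exception as exc:  # network errors, quota errors, and so on
            last_error = exc
            continue
        if not looks_unreliable(text, output):
            return output
    raise RuntimeError("all engines failed or produced unreliable output") from last_error

# Stand-in engines (hypothetical) to exercise the chain.
def broken(text: str, lang: str) -> str:
    raise TimeoutError("primary engine unavailable")

def empty(text: str, lang: str) -> str:
    return ""

def ok(text: str, lang: str) -> str:
    return "Bonjour le monde"

print(translate_with_fallback("Hello world", "fr", [broken, empty, ok]))
```

Raising when every engine fails, rather than returning the last draft, keeps bad output from being published silently; the page can then be queued for human attention.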

Rule-based control

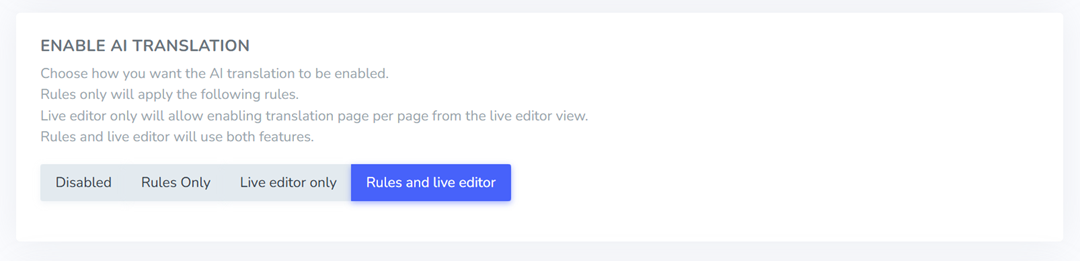

Rule-based control allows you to define how translation engines behave based on page type, language, section, or business priority. For example, you might enforce LLM refinement only on high-value landing pages, use MT-only for long-tail blog posts, or apply stricter terminology rules for product catalogs. This helps ensure each part of your website receives the right level of translation quality without unnecessary cost.

These rules act as a governance layer that keeps translations aligned with brand tone and compliance standards. Instead of treating all pages equally, you can create granular logic that optimizes cost, speed, and quality. Your translation workflow becomes adaptive—automating simple pages and reserving more advanced processing for content that directly impacts conversions or user trust.

This is where Linguise AI Translation provides a strong advantage. Linguise allows deep granular control, letting you define exactly which URLs, languages, or content types should use premium AI refinement versus standard Cloud AI translation. For large websites with thousands of mixed-value pages, this precision means you can prioritize critical funnels while keeping long-tail translation fast and cost-efficient—without building complex rules manually.
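For teams that do maintain rules by hand, the idea can be expressed as an ordered list of URL patterns. This is a generic sketch under assumed names: the paths, mode strings, and `Rule` structure are hypothetical and do not reflect Linguise's configuration format.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional

@dataclass(frozen=True)
class Rule:
    url_pattern: str                # shell-style pattern, e.g. "/blog/*"
    mode: str                       # hypothetical translation mode name
    language: Optional[str] = None  # None means the rule applies to any language

# First matching rule wins, so more specific rules go first.
RULES = [
    Rule("/landing/*", "llm_refinement"),
    Rule("/products/*", "mt_with_strict_glossary"),
    Rule("/blog/*", "mt_only"),
]
DEFAULT_MODE = "mt_only"

def mode_for(path: str, language: str) -> str:
    """Return the translation mode for a URL path and target language."""
    for rule in RULES:
        if fnmatchcase(path, rule.url_pattern) and rule.language in (None, language):
            return rule.mode
    return DEFAULT_MODE

print(mode_for("/landing/pricing", "de"))
print(mode_for("/blog/2019/old-post", "fr"))
print(mode_for("/about", "es"))
```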

Managing long-tail content efficiently

Long-tail content—such as old blog posts, minor documentation pages, or thousands of product variations—makes up a large portion of most websites. Translating this manually or with heavy LLM processing would be too expensive and time-consuming. That’s why automation plays a key role here.

Using MT engines or hybrid MT+LLM with minimal human intervention allows you to translate long-tail pages quickly while maintaining functional accuracy. You can also apply batch updates, automated glossary checks, and periodic quality sampling to ensure these pages remain consistent over time. By optimizing long-tail content with scalable automation, your team can focus human effort where it matters most—on high-impact pages that influence conversions and brand trust.
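Periodic quality sampling can be as simple as drawing a small random subset of long-tail URLs for a reviewer to spot-check. The sketch below assumes a 2% sampling rate and a hypothetical list of URLs; both are placeholders to tune for your own volume.

```python
import random
from typing import List, Optional, Sequence

def sample_for_review(
    urls: Sequence[str],
    rate: float = 0.02,
    seed: Optional[int] = None,
    minimum: int = 1,
) -> List[str]:
    """Pick a random subset of pages for a human spot-check."""
    if not urls:
        return []
    rng = random.Random(seed)  # a fixed seed makes a review batch reproducible
    k = min(len(urls), max(minimum, round(len(urls) * rate)))
    return rng.sample(list(urls), k)

long_tail = [f"/blog/post-{i}" for i in range(500)]
batch = sample_for_review(long_tail, rate=0.02, seed=7)
print(len(batch))
```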

Governance and quality operations

As your translation system grows, governance becomes the backbone that keeps everything consistent. Without clear rules, terminology standards, and quality checks, even the best AI setup can drift over time. Strong governance ensures every translation—whether MT, LLM, or hybrid—stays aligned with your brand and meets the level of accuracy your users expect.

Style guides and glossaries

Style guides and glossaries give AI systems the structure they need to produce consistent translations. A glossary ensures that key terms—such as product names, technical jargon, or brand-specific phrases—are translated the same way across all languages. Meanwhile, a style guide provides direction on tone, formality, punctuation, and regional preferences.

By defining these rules upfront, you reduce the risk of inconsistencies and minimize the amount of human correction needed later. This is especially important for large websites with diverse content types, where even slight variations in terminology or tone can confuse users. A clear, well-maintained set of linguistic guidelines helps both AI systems and human reviewers stay aligned.
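A glossary only helps if it is enforced, and a basic automated check is easy to sketch. The example below uses naive case-insensitive substring matching, which is an assumption that breaks down for inflected languages; the glossary entries are hypothetical.

```python
from typing import Dict, List

def glossary_violations(source: str, translation: str, glossary: Dict[str, str]) -> List[str]:
    """Return source terms whose required target term is missing from the translation."""
    src = source.casefold()
    out = translation.casefold()
    return [
        term
        for term, required in glossary.items()
        if term.casefold() in src and required.casefold() not in out
    ]

# Hypothetical English -> French glossary; brand names map to themselves.
glossary_fr = {"checkout": "paiement", "Linguise": "Linguise"}

print(glossary_violations(
    "Go to checkout with Linguise",
    "Allez à la caisse avec Linguise",
    glossary_fr,
))
```

A production check would typically add tokenization or lemmatization so that plural and conjugated forms are matched correctly.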

QA loops

Quality Assurance (QA) loops are continuous checkpoints that help catch errors and improve outputs over time. They typically involve automated quality detection, LLM-based self-review, and human sampling to evaluate accuracy, terminology use, and contextual correctness. Instead of relying on one-time reviews, QA loops introduce ongoing monitoring that evolves with your content.

These loops also provide valuable feedback to improve your translation stack. When recurring issues are detected—whether from an MT engine or an LLM—you can refine rules, update glossaries, or adjust engine settings. Over time, this process creates a self-improving system where both machines and humans learn from past outputs, keeping quality stable as your content library grows.
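As one small example of automated quality detection, the check below flags translations that drop template placeholders or change numbers. The placeholder syntax (`{name}`, `%s`, `%d`) and the issue labels are assumptions; adapt them to the templating your site uses.

```python
import re
from typing import List

PLACEHOLDER = re.compile(r"\{[A-Za-z_][A-Za-z0-9_]*\}|%[sd]")
DIGITS = re.compile(r"\d+")  # digit runs only, so "1,000" and "1.000" compare equal

def qa_issues(source: str, translation: str) -> List[str]:
    """Return a list of issue labels found by cheap structural checks."""
    issues = []
    if not translation.strip():
        issues.append("empty_translation")
    if sorted(PLACEHOLDER.findall(source)) != sorted(PLACEHOLDER.findall(translation)):
        issues.append("placeholder_mismatch")
    if sorted(DIGITS.findall(source)) != sorted(DIGITS.findall(translation)):
        issues.append("number_mismatch")
    return issues

print(qa_issues("Hi {name}, you have 3 items", "Bonjour {name}, vous avez 3 articles"))
print(qa_issues("Hi {name}, you have 3 items", "Bonjour, vous avez 2 articles"))
```

Checks like these catch mechanical errors only; tone and meaning still need LLM-based review or human sampling.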

Maintaining brand voice

Brand voice is one of the most complex elements for AI to get right because it goes beyond literal meaning: it reflects personality, tone, and emotional intent. Maintaining a consistent voice across languages requires clear instructions, strong style guidelines, and, for critical pages such as marketing campaigns or landing pages, human oversight.

LLMs are particularly helpful here because they can adapt style and tone more naturally than traditional MT engines. However, they still need direction to stay aligned. By combining brand rules, curated examples, and periodic human checks for key content areas, you ensure that your brand feels unified across every region. This consistency builds trust and strengthens user experience in every language.

Measurement for continuous optimization

A translation stack is never “finished.” To keep quality high and costs under control, teams need ongoing measurement: tracking performance, identifying gaps, and improving the system over time. With the right metrics, you can quickly see what’s working, what needs adjustment, and where to reinvest resources for the biggest impact.

Tracking cost & speed

Cost and speed are two of the most important metrics in any translation operation. By tracking cost per word, per language, or per engine, teams can understand exactly where their budget is going and identify opportunities to optimize. This becomes especially useful when using multiple engines or adding human reviewers, as each workflow has different cost implications.

Speed is equally important. Fast translation cycles allow you to publish updates quickly, support more languages, and stay competitive in global markets. Monitoring turnaround time helps you identify bottlenecks across AI processing, human review, and content workflows. With good visibility, you can adjust your tech stack or rules to improve delivery times without reducing quality.
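Both metrics can be derived from a simple log of translation jobs. The sketch below assumes a hypothetical `Job` record with word count, cost, and turnaround time, and groups the results by engine and language; the figures in the example are made up.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

@dataclass
class Job:
    engine: str
    language: str
    words: int
    cost: float     # in your billing currency
    seconds: float  # turnaround time for the job

def summarize(jobs: Iterable[Job]) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Aggregate cost per word and average turnaround per (engine, language)."""
    totals = defaultdict(lambda: {"words": 0, "cost": 0.0, "seconds": 0.0, "jobs": 0})
    for job in jobs:
        bucket = totals[(job.engine, job.language)]
        bucket["words"] += job.words
        bucket["cost"] += job.cost
        bucket["seconds"] += job.seconds
        bucket["jobs"] += 1
    return {
        key: {
            "cost_per_word": b["cost"] / b["words"] if b["words"] else 0.0,
            "avg_seconds": b["seconds"] / b["jobs"],
        }
        for key, b in totals.items()
    }

# Made-up sample data for illustration.
jobs = [
    Job("mt", "fr", 1000, 0.02, 4.0),
    Job("mt", "fr", 3000, 0.06, 8.0),
    Job("llm", "fr", 1000, 0.50, 30.0),
]
for key, stats in summarize(jobs).items():
    print(key, stats)
```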

Quality scoring

Quality scoring provides a structured way to evaluate how well your AI translation workflows are performing. This can include error categories like terminology mistakes, grammar issues, tone mismatches, or missing context. By assigning scores consistently across languages and pages, you can identify patterns—such as which engines perform better for certain domains or where glossaries need improvement.

Over time, these scores help guide decisions about when to switch engines, introduce human review, or adjust rules. They also ensure quality remains consistent as you expand into new markets or increase translation volume. The goal isn’t perfection on every page, but consistent performance aligned with the needs of each content type.
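One common way to turn error categories into a comparable number is a weighted penalty normalized by text length. The sketch below is an assumed scheme for illustration: the weights and the 100-point scale are arbitrary choices, not an industry standard.

```python
from typing import Dict

# Hypothetical severity weights per error category.
ERROR_WEIGHTS = {
    "terminology": 2.0,
    "grammar": 1.0,
    "tone": 1.0,
    "missing_context": 3.0,
}

def quality_score(error_counts: Dict[str, int], word_count: int) -> float:
    """Score from 0 to 100: start at 100, subtract weighted errors per 100 words."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    # An unknown category raises KeyError, which keeps the taxonomy consistent.
    penalty = sum(ERROR_WEIGHTS[category] * count for category, count in error_counts.items())
    return max(0.0, 100.0 - penalty * 100 / word_count)

print(quality_score({"terminology": 2, "grammar": 1}, word_count=500))
```

Normalizing by word count keeps scores comparable between a short microcopy string and a long article.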

Continuous feedback loop

A continuous feedback loop ties everything together. It means collecting input from automated QA, human reviewers, customer support, and even end-users to refine the system. When issues appear repeatedly—wrong terminology, awkward phrasing, cultural mismatches—you can feed those insights back into the translation stack through updated glossaries, adjusted rules, or improved prompts.

This loop ensures that your translation system becomes smarter and more efficient over time rather than remaining static. It also helps ensure that AI outputs stay aligned with your evolving brand, products, and markets. With continuous improvement built into your workflow, you can maintain high-quality translations even as your website grows and languages multiply.
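To decide which issues deserve a glossary or rule update, it helps to count how often each one recurs across all feedback sources. The sketch below assumes each report is a small dictionary with hypothetical `language`, `category`, and `term` fields, and treats three occurrences as the threshold for action.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Issue = Tuple[str, str, str]  # (language, category, term)

def recurring_issues(reports: Iterable[Dict[str, str]], threshold: int = 3) -> List[Tuple[Issue, int]]:
    """Return issues reported at least `threshold` times, most frequent first."""
    counts = Counter((r["language"], r["category"], r["term"]) for r in reports)
    return [(issue, n) for issue, n in counts.most_common() if n >= threshold]

# Made-up reports from QA checks, reviewers, and support tickets.
reports = [
    {"language": "fr", "category": "terminology", "term": "checkout"},
    {"language": "fr", "category": "terminology", "term": "checkout"},
    {"language": "de", "category": "tone", "term": "welcome banner"},
    {"language": "fr", "category": "terminology", "term": "checkout"},
]
print(recurring_issues(reports))
```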

Conclusion

Choosing an AI translation stack has become essential for websites expanding into multiple languages, and the key to success lies in selecting the right mix of MT engines, LLM refinement, and human involvement. By matching each content type with the appropriate translation method and designing a flexible, rule-driven technical setup, teams can balance quality, cost, and speed without unnecessary complexity.

Optimizing your AI translation stack is an ongoing effort rather than a one-time setup. If you want a solution that offers hybrid AI, granular per-URL control, and premium-quality refinement without the operational overhead, consider trying Linguise AI Translation, a streamlined way to produce reliable, high-quality multilingual content at scale.